List of individual tasks

- Task 1: Training a basic MLP

- Task 2: Evaluation

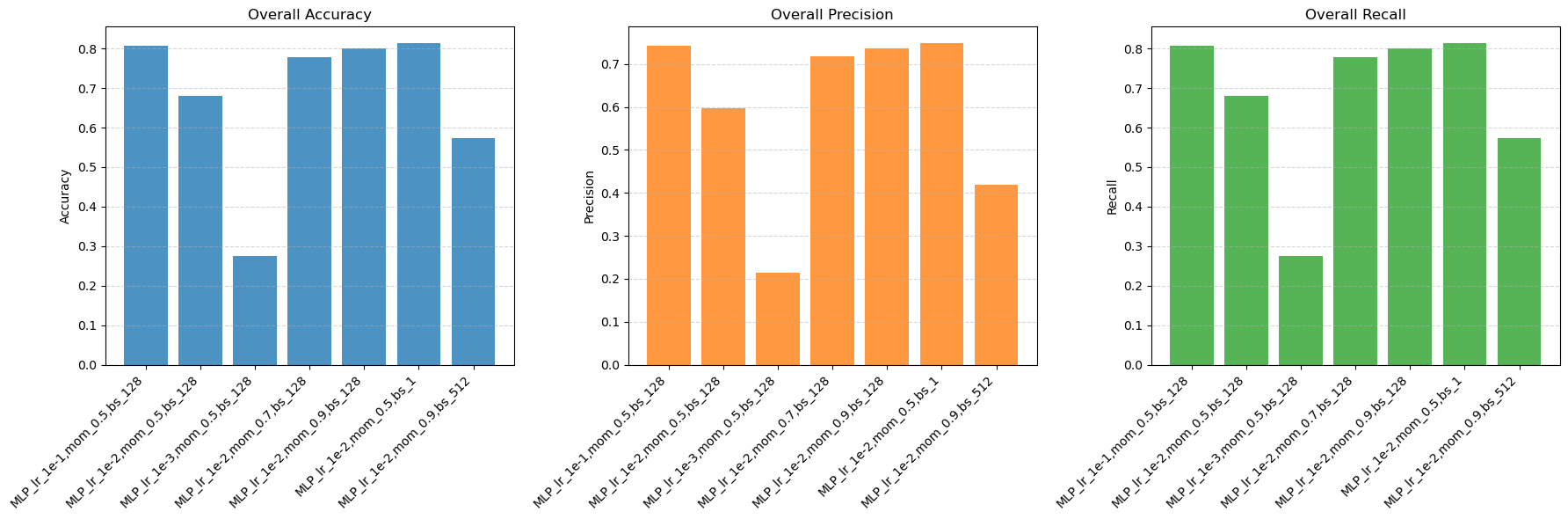

- Task 3: Training a basic MLP modifying hyperparame…

- Task 4: Comparison

- Task 5: CNN architecture

- Task 6: Training a basic CNN

- Task 7: Evaluation

- Task 8: Training a basic CNN modifying hyperpara…

- Task 9: Evaluation

- Task 10: Evaluation

- Task 11: Adding a hidden layer

- Task 12: Compare performance

- Task 13: Compare architectures

- Task 14: Compare performance

- Task 15: Update architecture

- Task 16: Adding a convolutional layer

- Task 17: Compare architectures

- Task 18: Compare architectures

- Task 19: Additional improvements
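Several tasks above compare an MLP with a CNN and then grow each one (adding a hidden layer, adding a convolutional layer). One quick way to compare these architectures is by trainable parameter count. The sketch below is a minimal, framework-free illustration under assumptions that are not stated in the task list: 28x28 single-channel inputs, 10 output classes, 3x3 "valid" convolutions with stride 1, each followed by a 2x2 max-pool, and a single dense classifier on top. The helper names (`dense_params`, `conv2d_params`, `small_cnn_params`, and so on) are hypothetical.

```python
from typing import List

# Hypothetical helpers for comparing architectures by parameter count.
# Assumption: 28x28 grayscale inputs and 10 classes (not given in the task list).

def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

def conv2d_params(in_ch: int, out_ch: int, k: int) -> int:
    """Weights plus biases of a 2D convolution with a k x k kernel."""
    return k * k * in_ch * out_ch + out_ch

def conv2d_out_size(size: int, k: int, stride: int = 1, padding: int = 0) -> int:
    """Output length along one spatial dimension (also valid for pooling windows)."""
    return (size + 2 * padding - k) // stride + 1

def mlp_params(layer_sizes: List[int]) -> int:
    """Total parameters of an MLP given [input, hidden..., output] sizes."""
    return sum(dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))

def small_cnn_params(side: int, conv_channels: List[int], k: int = 3,
                     n_classes: int = 10, in_ch: int = 1) -> int:
    """Conv (valid, stride 1) + 2x2 max-pool blocks, then one dense classifier."""
    total, ch = 0, in_ch
    for out_ch in conv_channels:
        total += conv2d_params(ch, out_ch, k)
        side = conv2d_out_size(side, k)            # valid convolution shrinks the map
        side = conv2d_out_size(side, 2, stride=2)  # 2x2 max-pool has no parameters
        ch = out_ch
    return total + dense_params(ch * side * side, n_classes)

print(mlp_params([784, 128, 10]))       # basic MLP
print(mlp_params([784, 128, 64, 10]))   # MLP with an extra hidden layer
print(small_cnn_params(28, [16]))       # basic CNN
print(small_cnn_params(28, [16, 32]))   # CNN with an extra convolutional layer
```

Under these assumptions the four calls give 101770, 109386, 27210 and 12810 parameters. Note that the extra convolutional layer reduces the total, because the additional pooling step shrinks the flattened input to the dense classifier; adding a hidden layer to the MLP does the opposite.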
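The evaluation tasks in the list (Tasks 2, 7, 9 and 10) do not state which metrics are used. Assuming a multi-class classification setting with integer labels `0 .. n_classes - 1`, overall accuracy and a confusion matrix are common choices. The following is a minimal pure-Python sketch of both; the function names are illustrative, not taken from the original material.

```python
from typing import List, Sequence

def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("expected two non-empty sequences of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     n_classes: int) -> List[List[int]]:
    """Counts per (true class, predicted class); rows are true, columns predicted."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm

y_true = [0, 1, 2, 2, 1, 0]
y_pred = [0, 2, 2, 2, 1, 0]
print(accuracy(y_true, y_pred))             # 5 of 6 correct
print(confusion_matrix(y_true, y_pred, 3))  # off-diagonal entry shows the 1 -> 2 error
```

Reading the confusion matrix alongside accuracy makes it easier to see which classes a model confuses, which is useful when comparing the MLP and CNN variants.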