We assume you have read the reading material for this week prior to starting the tutorial. This tutorial covers basic terms in linear algebra and their implementation in NumPy.

Define:

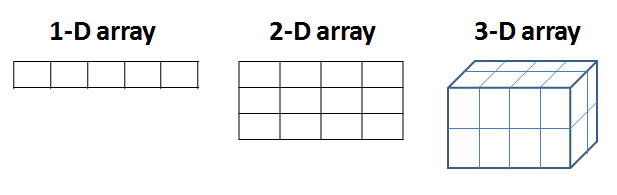

NumPy represents a vector as a 1-D array, a matrix as a 2-D array (an array of arrays), and an $n$-tensor as an $n$-dimensional array (e.g. a 3-D tensor is an array of 2-D arrays).

The following cells demonstrate how to create NumPy arrays:

# Import the NumPy library

import numpy as np

# Create a vector with 4 elements:

a = np.array([1, 6, 3, 1])

print(a)

[1 6 3 1]

# Create a 2x4 matrix:

A = np.array([[1, 42, 6, 4], [2, 6, 87, 2]])

print(A)

[[ 1 42  6  4]
 [ 2  6 87  2]]

# Print the size of the matrix

print(A.shape)

(2, 4)

# A 2x2x4 tensor:

T = np.array([[[1, 23, 3, 4], [42, 6, 7, 87]], [[9, 3, 11, 2], [2, 22, 15, 101]]])

print(T)

[[[  1  23   3   4]
  [ 42   6   7  87]]
 [[  9   3  11   2]
  [  2  22  15 101]]]

# Print the size of the tensor

print(T.shape)

(2, 2, 4)
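To make the "array of arrays" idea concrete, the following sketch inspects the number of dimensions of the tensor and indexes into it. It redefines `T` so that it runs on its own; `first_matrix` and `second_row` are illustrative names that do not appear elsewhere in the tutorial.

```python
import numpy as np

# Same 2x2x4 tensor as above
T = np.array([[[1, 23, 3, 4], [42, 6, 7, 87]],
              [[9, 3, 11, 2], [2, 22, 15, 101]]])

# Number of dimensions (axes) of the array
print(T.ndim)

# Indexing the first axis yields a 2x4 matrix
first_matrix = T[0]
print(first_matrix.shape)

# Indexing the first two axes yields a vector with 4 elements
second_row = T[0, 1]
print(second_row)
```

Each index removes one axis, which is why a 3-D tensor can be read as an array of matrices and each matrix as an array of vectors.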

A vector multiplied by a scalar (every element is scaled by the scalar):

a = np.array([3, 22, 3, 21])

a * 2

Inner product (dot product): The inner product (see lecture slides for details) of two vectors $a$ and $b$ of dimension $N$:

$$ a \cdot b = \sum_{i=1}^{N} a_i b_i = a^\top b $$

The inner product can be calculated using the NumPy function `np.dot(a, b)`:

# Create 2 vectors a and b

a = np.array([1, 2, 3, 42])

b = np.array([2, 3, 4, 5])

# calculate the dot product

np.dot(a, b)

Summation and subtraction of two vectors: vectors of the same dimension are added, subtracted, and multiplied elementwise.

The elementwise operations also exist for matrices and tensors. The elementwise multiplication is called the Hadamard product.

# Create 2 vectors

a = np.array([1, 2, 3, 42])

b = np.array([2, 3, 4, 5])

print("a + b is : \n", a + b)

print("a - b is : \n", a - b)

print("a * b is : \n", a * b)

a + b is :
 [ 3  5  7 47]
a - b is :
 [-1 -1 -1 37]
a * b is :
 [  2   6  12 210]
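The same operators work elementwise on matrices of equal shape. The sketch below uses two small example matrices, `M1` and `M2`, which are made up for illustration and are not part of the tutorial data.

```python
import numpy as np

# Two illustrative 2x2 matrices (not from the tutorial)
M1 = np.array([[1, 2], [3, 4]])
M2 = np.array([[5, 6], [7, 8]])

# Elementwise sum: each entry is M1[i, j] + M2[i, j]
total = M1 + M2

# Hadamard product: each entry is M1[i, j] * M2[i, j]
hadamard = M1 * M2

print(total)
print(hadamard)
```

Note that `*` on 2-D arrays is the Hadamard product, not the matrix product.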

The Euclidean length (also called the $L_2$-norm) of a vector $a$ is the square root of the inner product of $a$ with itself:

$$ \|a\| = \sqrt{\sum_{i=1}^{N} a_i^2} = \sqrt{a^\top a}, $$

where $a^\top$ is the transpose of $a$.

Later in the course you will see examples of other norms.

# Inner product of a with itself

a_a_inner_product = np.dot(a, a)

Euc_len_a = np.sqrt(a_a_inner_product)

print('Euclidean length of a:', Euc_len_a)

Euclidean length of a: 42.16633728461603

The Euclidean length can also be calculated using `np.linalg.norm()`:

print('Euclidean length of a:', np.linalg.norm(a))

Euclidean length of a: 42.16633728461603
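`np.linalg.norm` takes an `ord` argument that selects which norm is computed. As a brief preview, the sketch below compares the default $L_2$-norm with the $L_1$-norm and the maximum norm. Which norms the course covers later is not stated here, so the choice of these two is an assumption.

```python
import numpy as np

a = np.array([1, 2, 3, 42])

# Default: Euclidean (L2) norm, the square root of the sum of squares
l2 = np.linalg.norm(a)

# L1 norm: sum of absolute values
l1 = np.linalg.norm(a, ord=1)

# Maximum (infinity) norm: largest absolute value
linf = np.linalg.norm(a, ord=np.inf)

print(l2, l1, linf)
```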

Vectors $a$ and $b$ are considered orthogonal (perpendicular) if $ a^\top b=0$.

Recall that the dot product is related to the angle between vectors $a$ and $b$:

$$ \cos(\theta) = \frac{a \cdot b}{\|a\| \|b\|} $$

# Orthogonal vectors

a = np.array([1, -2, 4])

b = np.array([2, 5, 2])

print(a.dot(b))  # Alternative way to calculate the dot product; equivalent to np.dot(a, b)

0
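The cosine relation can be turned into a small function that returns the angle itself. This is a minimal sketch: `angle_between` is a hypothetical helper name, and it assumes both vectors are non-zero.

```python
import numpy as np

def angle_between(u, v):
    """Angle in degrees between two non-zero vectors u and v."""
    cos_theta = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    # Clip to [-1, 1] so rounding errors cannot push the value outside arccos' domain
    return np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0)))

a = np.array([1, -2, 4])
b = np.array([2, 5, 2])

angle_ab = angle_between(a, b)      # orthogonal vectors: 90 degrees
angle_aa = angle_between(a, 2 * a)  # parallel vectors: approximately 0 degrees

print(angle_ab, angle_aa)
```

An inner product of zero corresponds to $\cos(\theta) = 0$, i.e. an angle of 90 degrees, which is why orthogonality can be checked with the dot product alone.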

Orthonormal vectors are orthogonal and have unit length.

In the cell below, the orthogonal vectors $a$ and $b$ are made orthonormal by dividing each by its length. The division gives each vector unit length; orthogonality is preserved because scaling a vector does not change its direction.

a_normalized = a / np.linalg.norm(a)

print('a_normalized:', a_normalized)

b_normalized = b / np.linalg.norm(b)

print('b_normalized:', b_normalized)

# Inner product of orthonormal vectors

print('The inner product of a_normalized and b_normalized: \n', a_normalized.dot(b_normalized))

a_normalized: [ 0.21821789 -0.43643578  0.87287156]
b_normalized: [0.34815531 0.87038828 0.34815531]
The inner product of a_normalized and b_normalized:
 0.0
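Both conditions, unit length and orthogonality, can be checked in one step. The sketch below stacks the normalized vectors as rows of a matrix `Q` (an illustrative name) and compares all pairwise inner products with the identity matrix, using a tolerance because of floating-point rounding.

```python
import numpy as np

a = np.array([1, -2, 4])
b = np.array([2, 5, 2])

# Stack the normalized vectors as the rows of a 2x3 matrix
Q = np.vstack([a / np.linalg.norm(a), b / np.linalg.norm(b)])

# Entry (i, j) of this product is the inner product of row i with row j
gram = np.dot(Q, Q.T)

# Orthonormal rows give ones on the diagonal and zeros elsewhere
is_orthonormal = np.allclose(gram, np.identity(2))
print(is_orthonormal)
```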

The following section provides an overview of important matrix properties and matrix compositions.

A square matrix is a two-dimensional array with the same number of rows and columns ($N \times N$).

# Create a square random matrix

A = np.random.randint(5, size=(3, 3))

An identity matrix is a square matrix that contains ones on the diagonal and zeros elsewhere.

The NumPy function `np.identity(N)` creates an identity matrix with N ones on the diagonal, i.e. of size $N \times N$.

I3 = np.identity(3)

print(I3)

[[1. 0. 0.]
 [0. 1. 0.]
 [0. 0. 1.]]

I4 = np.identity(4)

print(I4)

[[1. 0. 0. 0.]
 [0. 1. 0. 0.]
 [0. 0. 1. 0.]
 [0. 0. 0. 1.]]

A diagonal matrix is a matrix in which all elements off the diagonal are zero; the diagonal elements themselves may take any value.

The NumPy function `np.diag(a)` creates a 2-D array (diagonal matrix) given a 1-D array (a list or vector containing the diagonal elements) as input.

# Create an array:

v = np.array([3., 2., 5.])

# Create a matrix with elements of v on the diagonal:

D = np.diag(v)

print(D)

[[3. 0. 0.]
 [0. 2. 0.]
 [0. 0. 5.]]
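`np.diag` also works in the other direction: given a 2-D array, it returns the diagonal as a 1-D array. A short sketch of the round trip, where `diagonal` is an illustrative variable name:

```python
import numpy as np

v = np.array([3., 2., 5.])

# 1-D input: build a diagonal matrix
D = np.diag(v)

# 2-D input: extract the diagonal as a 1-D array
diagonal = np.diag(D)

print(diagonal)
```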

Matrix multiplication of two matrices can be conceptualized as a series of consecutive inner products between corresponding row-column pairs.

Consider the matrices A1 and A2:

A1 = np.array([[1, 3, 0], [4, 4, 4]])

A2 = np.array([[2, 2], [0, 4], [2, 3]])

In NumPy, matrices (and other arrays) are zero-indexed like regular Python lists. This is in contrast to mathematical notation, which is one-indexed.

In the following, matrix multiplication is demonstrated as a series of inner products. The cell below shows matrix multiplication of `A1` and `A2` using the `dot` function in NumPy:

print(np.dot(A1, A2))

[[ 2 14]
 [16 36]]

The first element ($2$) of the result is calculated as the inner product of the first row of `A1` and the first column of `A2`:

# A1 first row, A2 first column : position [1,1]

print(np.dot(A1[0, :], A2[:, 0]))

2

Similarly, the other elements can be calculated using the appropriate combinations of rows of `A1` and columns of `A2`:

# A1 second row, A2 first column : position [2,1]

print("A1 second row, A2 first column:", np.dot(A1[1, :], A2[:, 0]))

# A1 first row, A2 second column : position [1,2]

print("A1 first row, A2 second column:", np.dot(A1[0, :], A2[:, 1]))

# A1 second row, A2 second column : position [2,2]

print("A1 second row, A2 second column:", np.dot(A1[1, :], A2[:, 1]))

A1 second row, A2 first column: 16
A1 first row, A2 second column: 14
A1 second row, A2 second column: 36
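The row-column pattern above can be written as two nested loops. The function below is a teaching sketch rather than a replacement for `np.dot`; `matmul_by_inner_products` is a hypothetical name chosen for this example.

```python
import numpy as np

def matmul_by_inner_products(X, Y):
    """Multiply X (n x k) by Y (k x m) using one inner product per entry."""
    n, k = X.shape
    k2, m = Y.shape
    assert k == k2, "inner dimensions must match"
    result = np.zeros((n, m), dtype=np.result_type(X, Y))
    for i in range(n):        # rows of X
        for j in range(m):    # columns of Y
            result[i, j] = np.dot(X[i, :], Y[:, j])
    return result

A1 = np.array([[1, 3, 0], [4, 4, 4]])
A2 = np.array([[2, 2], [0, 4], [2, 3]])

C = matmul_by_inner_products(A1, A2)
print(C)

# The loop version agrees with NumPy's built-in matrix product
print(np.array_equal(C, np.dot(A1, A2)))
```

The number of columns of the first matrix must equal the number of rows of the second; the result has one row per row of `A1` and one column per column of `A2`.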

Multiplying a matrix $A$ (from either the left or the right) by the identity matrix yields the matrix $A$ itself:

$$ AI = A = IA. $$

As an example:

# Create 3x3 matrix A with random values between 0 and 4.

A = np.random.randint(5, size=(3, 3))

# Create 3x3 identity matrix.

I = np.identity(3)

# Multiply the matrices

R = np.dot(A, I)

print("A:\n", A, "\nI:\n", I, "\nmultiplication result:\n", R)

A:
 [[4 2 3]
 [1 0 1]
 [2 0 1]]
I:
 [[1. 0. 0.]
 [0. 1. 0.]
 [0. 0. 1.]]
multiplication result:
 [[4. 2. 3.]
 [1. 0. 1.]
 [2. 0. 1.]]

Matrix multiplication can also be performed using the `@` operator. The example below demonstrates that the `@` operator yields the same result as above:

R2 = A @ I

print(R2)

[[4. 2. 3.]
 [1. 0. 1.]
 [2. 0. 1.]]
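The identity $AI = A = IA$ covers multiplication from both sides, while the cells above only multiply from the right. The sketch below checks both sides and also shows `np.matmul`, the function form of the `@` operator. The matrix `A` is fixed to the values printed above so that the example is reproducible.

```python
import numpy as np

# The random matrix printed above, fixed here for reproducibility
A = np.array([[4, 2, 3], [1, 0, 1], [2, 0, 1]])
I = np.identity(3)

right = A @ I   # identity on the right
left = I @ A    # identity on the left

print(np.array_equal(right, A), np.array_equal(left, A))

# np.matmul(A, I) computes the same product as A @ I
same_as_matmul = np.array_equal(np.matmul(A, I), right)
print(same_as_matmul)
```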

Recall that determinants are utilized when solving linear systems of equations, and a determinant of 0 indicates that the matrix does not have an inverse.

Use `np.linalg.det()` to calculate the determinant of a matrix.

# Example matrices

A = np.array([[1, 1, 1], [0, 2, 5], [2, 5, -1]])

B = np.array([[0, 0, 0], [0, 2, 5], [2, 5, -1]])

# Calculate the determinant

print("det(A):", np.linalg.det(A))

print("det(B):", np.linalg.det(B))

det(A): -21.0
det(B): 0.0
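Because `np.linalg.det` works in floating point, a determinant that is mathematically zero can come out as a tiny non-zero number. A cautious check therefore compares against zero with a tolerance. In the sketch below, `is_singular` is a hypothetical helper and the tolerance `1e-12` is an assumed value, not a recommendation from the course.

```python
import numpy as np

def is_singular(M, tol=1e-12):
    """Return True if det(M) is zero up to the (assumed) absolute tolerance tol."""
    return bool(np.isclose(np.linalg.det(M), 0.0, atol=tol))

A = np.array([[1, 1, 1], [0, 2, 5], [2, 5, -1]])
B = np.array([[0, 0, 0], [0, 2, 5], [2, 5, -1]])

print(is_singular(A))  # det(A) is -21, so A is invertible
print(is_singular(B))  # B has a row of zeros, so det(B) is 0
```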

The function `np.linalg.inv(A)` can be used to calculate the inverse of a matrix.

The function raises a `LinAlgError` when the matrix is singular (i.e. the determinant is zero).

print("A^-1:", np.linalg.inv(A))

print("B^-1:", np.linalg.inv(B))  # This line raises an error because B is singular

A^-1: [[ 1.28571429 -0.28571429 -0.14285714]
 [-0.47619048  0.14285714  0.23809524]
 [ 0.19047619  0.14285714 -0.0952381 ]]
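To keep a script running when a matrix turns out to be singular, the error can be caught explicitly. This is a minimal sketch; setting `B_inv` to `None` as a fallback is an illustrative choice.

```python
import numpy as np

B = np.array([[0, 0, 0], [0, 2, 5], [2, 5, -1]])

try:
    B_inv = np.linalg.inv(B)
except np.linalg.LinAlgError as err:
    # Raised by NumPy when the matrix is singular
    B_inv = None
    print("B is not invertible:", err)
```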

The matrix A multiplied by its inverse yields the identity matrix:

I = np.dot(A, np.linalg.inv(A))

print(I)

[[1. 0. 0.]
 [0. 1. 0.]
 [0. 0. 1.]]

The result of the calculation above may deviate slightly from the exact identity matrix. This is due to the limited precision of floating-point numbers.
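Because of these rounding effects, results should be compared with a tolerance rather than with exact equality. The sketch below uses `np.allclose` with its default tolerances; `product` is an illustrative variable name.

```python
import numpy as np

A = np.array([[1, 1, 1], [0, 2, 5], [2, 5, -1]])

# A multiplied by its inverse; entries may differ from 0 and 1 by rounding errors
product = np.dot(A, np.linalg.inv(A))

# Compare with the identity matrix up to a small tolerance
close_to_identity = np.allclose(product, np.identity(3))
print(close_to_identity)
```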

# Create a diagonal matrix

A = np.diag([5, 2, 3])

print(A)

[[5 0 0]
 [0 2 0]
 [0 0 3]]

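The determinant of a diagonal matrix is the product of the elements on the diagonal. Here the exact value is $5 \cdot 2 \cdot 3 = 30$; the printed result differs only by floating-point rounding.

print(np.linalg.det(A))

29.99999999999999

The inverse of a diagonal matrix with non-zero diagonal elements is again a diagonal matrix, containing the reciprocal of each element on the diagonal.

print(np.linalg.inv(A))

[[0.2        0.         0.        ]
 [0.         0.5        0.        ]
 [0.         0.         0.33333333]]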
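Both properties can be verified directly from the diagonal elements, without calling the general routines. The sketch below assumes all diagonal elements are non-zero; `det_from_diagonal` and `inv_from_diagonal` are illustrative names.

```python
import numpy as np

d = np.array([5., 2., 3.])
A = np.diag(d)

# Determinant: product of the diagonal elements (5 * 2 * 3 = 30)
det_from_diagonal = np.prod(d)

# Inverse: diagonal matrix of reciprocals (requires non-zero diagonal elements)
inv_from_diagonal = np.diag(1.0 / d)

print(np.isclose(np.linalg.det(A), det_from_diagonal))
print(np.allclose(np.linalg.inv(A), inv_from_diagonal))
```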

This concludes the tutorial.