This exercise is about finding the pupil centers in videos of eyes. It is a prerequisite for the first mandatory assignment, and you will also use the data in subsequent exercises.

After the recording session you were provided with a folder containing your `.mp4` recordings as well as the corresponding patterns and screen coordinates. You have also been provided with two folders, `test_subject_1` and `test_subject_3`, containing similar data collected from two different test subjects. Both folders are located in the folder `test_subjects`.

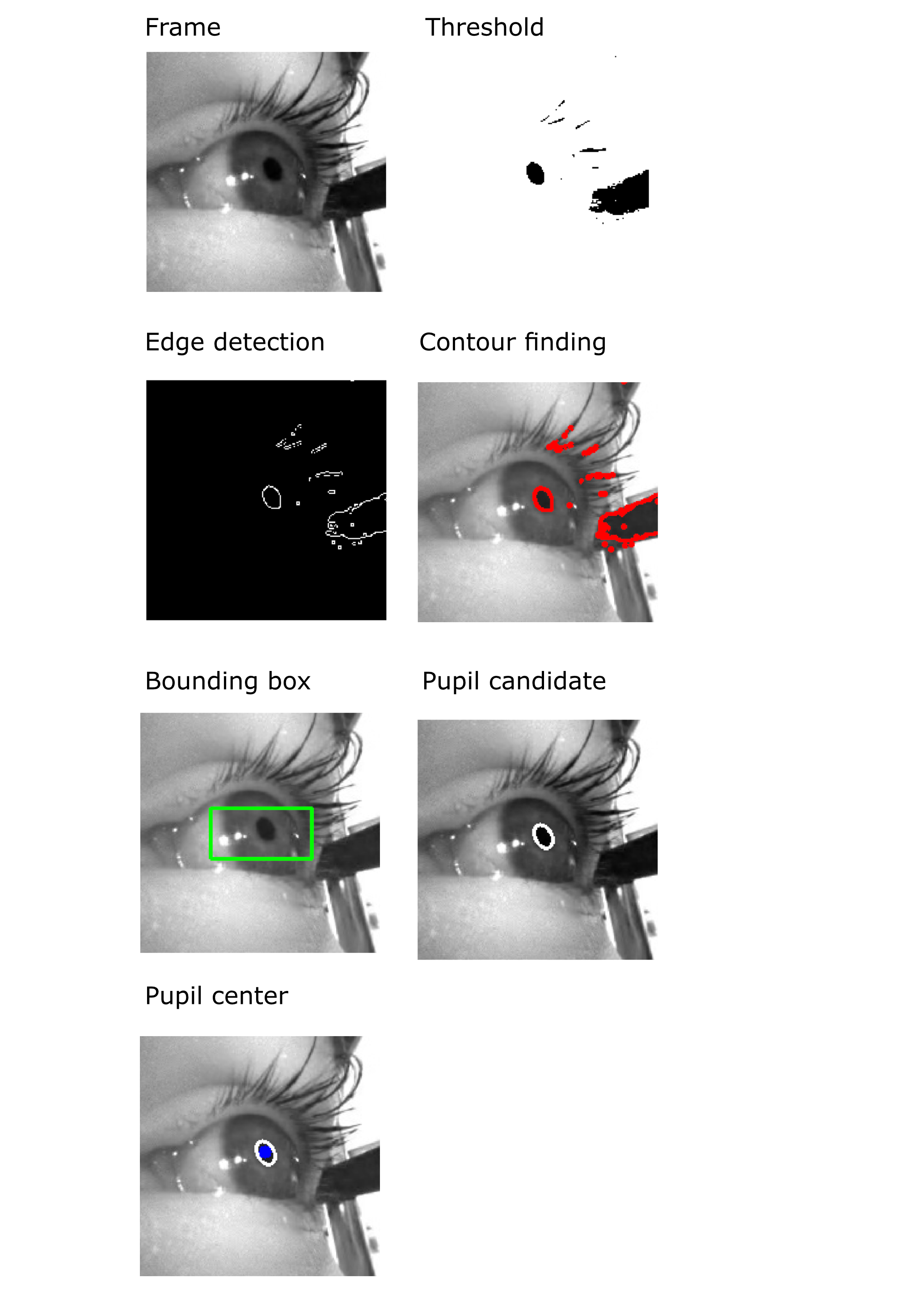

This notebook contains the functions needed to visualize and preprocess the videos. The videos were recorded with the Pupil Labs Neon eye tracking module while the test subjects performed four different tasks, gazing at a grid, an ellipse, a line and a random pattern.
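Before working with the videos it can help to collect all recordings in one list. The following is a minimal sketch using Python's standard `pathlib`, assuming only that the recordings are `.mp4` files stored somewhere below a given folder (the exact sub-folder layout is not specified here). `find_recordings` is a hypothetical helper for illustration, not one of the functions provided in this notebook.

```python
from pathlib import Path


def find_recordings(root):
    """Return a sorted list of all .mp4 files found recursively below `root`.

    Hypothetical helper. Assumption: recordings are stored as .mp4 files
    somewhere inside `root` (e.g. inside test_subjects/test_subject_1);
    the exact folder layout is not specified by the exercise.
    """
    # rglob searches all sub-folders; sorting gives a stable, reproducible order
    return sorted(Path(root).rglob("*.mp4"))
```

For example, `find_recordings("test_subjects")` would list the videos of both test subjects, and the same call on your own recording folder lists your videos. Sorting keeps the order identical between runs, which makes it easier to match videos with their pattern and screen coordinate files.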